-

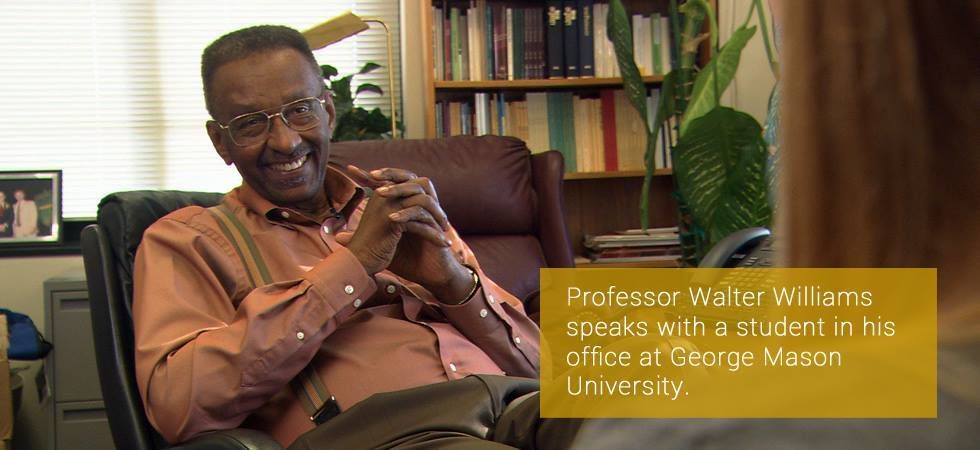

A Lion of a Scholar, a Lamb of a Man: Rest in Peace, Walter E. Williams

Walter Williams passed last night or this morning. I spent the first hour of the morning crying, gave my course lecture with dry eyes, then cried some more. My first “paper talk” was in Prof Williams’ PhD micro course, discussing a Demsetz paper. I bombed terribly: nervous and over-prepared, I drew a panicked blank. Prof Williams…

-

What’s the difference between game theory and agent-based modeling?

The following was written as a response to a Quora question, linked here. Game theory is a framework for analyzing strategic interaction, developed so that players can generally “solve” for some strategy or mix of strategies that optimizes utility, profit, payoffs, or some other indicator of well-being. Even in complicated games, the idea is that players…

-

How to Start Publishing in Economics as a Grad Student: A Few (Hopefully) Helpful Tips

In the third year of my PhD in economics, I wasn’t sure what to do with the glut of first drafts I’d accumulated by writing papers for classes and conferences. I had something like eight papers I’d categorize as good first drafts, some rougher than others. I knew what it took to write a…

-

An Ecologically Rational Analysis of Nudge Theory

Here’s a short essay on how nudge theory would be interpreted in an ecologically rational frame. This essay is a bit of a teaser for a paper I’m working on about nudge theory and its parallels to the socialist calculation debate. Enjoy!

1. Introduction

In 2008, Chicago economist Richard Thaler and Harvard Law School Professor…

-

My problem with Kirznerian surprise

The problem I see with Kirznerian “surprise,” as Kirzner elaborates in his “Entrepreneurial Discovery and the Competitive Market Process” (1997, pp. 71–72), is that his exposition of the nature of surprise makes the process of entrepreneurial discovery rather mystical, dependent on some inscrutable quality called “alertness.” Yes, people do not search the neoclassical way….

-

Published in Public Choice online: My book review of Colander and Kupers’ “Complexity and the Art of Public Policy”

My book review of Colander and Kupers’ “Complexity and the Art of Public Policy” has been published online in the journal Public Choice. http://link.springer.com/article/10.1007/s11127-016-0344-5 This is my first economics publication. You might recognize the review from an earlier blog post of mine, though the published review is more polished and detailed.

-

Book Review: Complexity and the Art of Public Policy

In Complexity and the Art of Public Policy, David Colander and Roland Kupers introduce the “complexity frame” of policy-making and argue for bottom-up policy-making, such as nudging individuals toward decisions more in line with the goals of the public choosers. In Part 1, “The Complexity Frame for Policy,” Colander and Kupers reduce the old policy…

-

A Conless Macroeconomics?

I’m reading Vela Velupillai’s “Variations on the Theme of Conning in Mathematical Economics” (2007), one of his many contributions on the pathologies introduced into economic theorizing by the insistence on axiomatic mathematics alone (this is the kind of mathematics we think of when we think of mathematics, whereby…

Abigail Devereaux

Economist & Writer
